Activates the Svc_Handler and then, if the TAO_Server_Strategy_Factory specifies it, runs the Svc_Handler in its own thread.

#include <Acceptor_Impl.h>

Public Member Functions

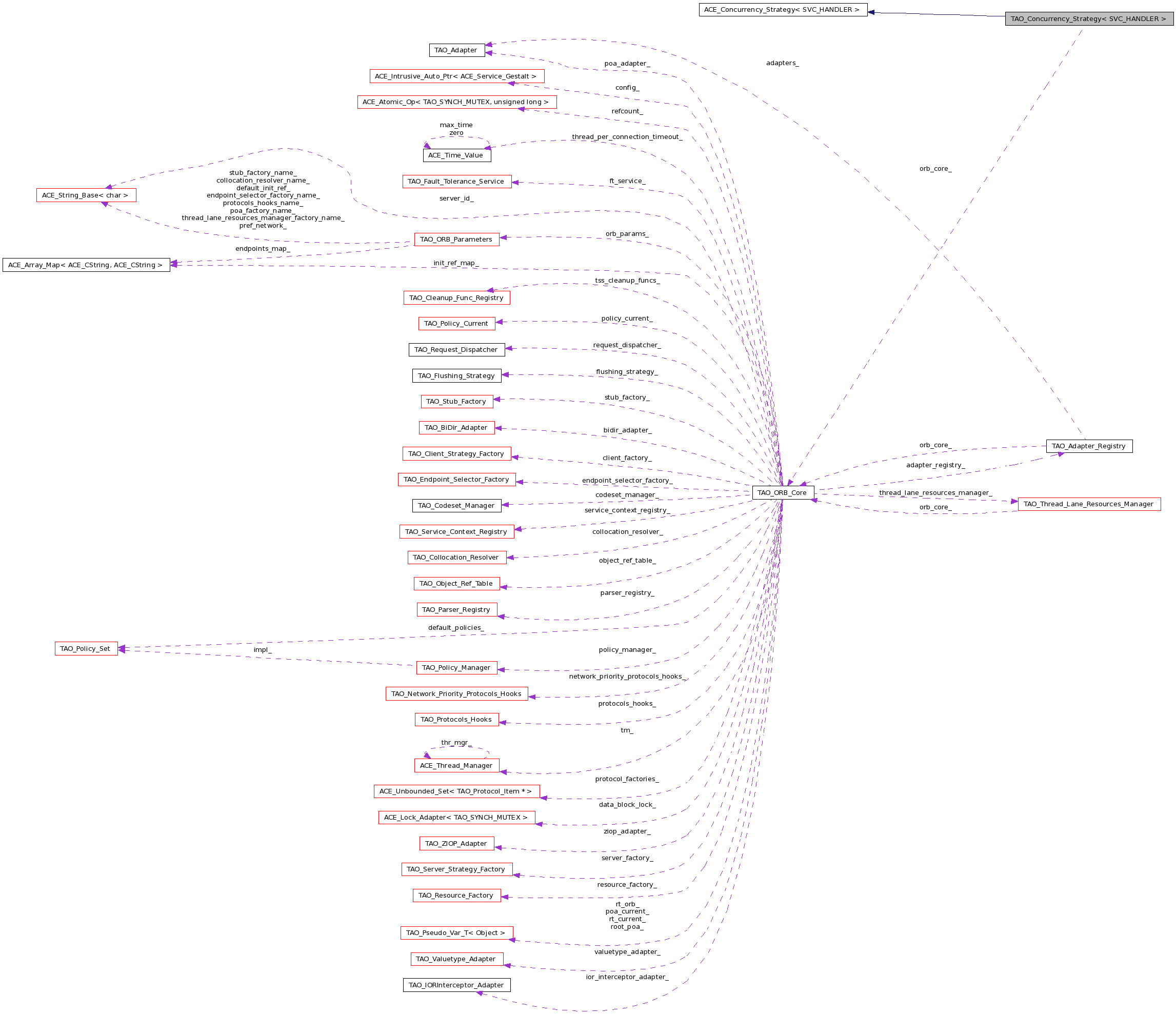

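| Return type | Member | Description |
|---|---|---|
| | `TAO_Concurrency_Strategy (TAO_ORB_Core *orb_core)` | Constructor. |
| `virtual int` | `activate_svc_handler (SVC_HANDLER *svc_handler, void *arg)` | Activates the Svc_Handler and, if the TAO_Server_Strategy_Factory specifies it, runs it in its own thread. |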

Protected Attributes

| Type | Member | Description |
|---|---|---|
| `TAO_ORB_Core *` | `orb_core_` | Pointer to the ORB Core. |


Definition at line 62 of file Acceptor_Impl.h.

`TAO_Concurrency_Strategy<SVC_HANDLER>::TAO_Concurrency_Strategy (TAO_ORB_Core *orb_core)`

Constructor.

`int TAO_Concurrency_Strategy<SVC_HANDLER>::activate_svc_handler (SVC_HANDLER *svc_handler, void *arg)` [virtual]

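Marks the handler's transport as opened in the server role, activates the Svc_Handler, and adds its transport to the transport cache. Then, if the TAO_Server_Strategy_Factory requests it, runs the Svc_Handler in its own thread (thread-per-connection); otherwise registers the transport's handler with the Reactor. Returns -1 if any step fails, after releasing the references taken so far.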

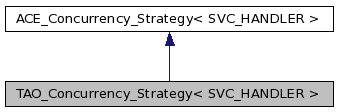

Reimplemented from ACE_Concurrency_Strategy<SVC_HANDLER>.

Definition at line 67 of file Acceptor_Impl.cpp.

```cpp
template <class SVC_HANDLER> int
TAO_Concurrency_Strategy<SVC_HANDLER>::activate_svc_handler (SVC_HANDLER *sh,
                                                             void *arg)
{
  // Indicate that this transport was opened in the server role
  sh->transport ()->opened_as (TAO::TAO_SERVER_ROLE);

  if (TAO_debug_level > 6)
    ACE_DEBUG ((LM_DEBUG,
                ACE_TEXT ("TAO (%P|%t) - Concurrency_Strategy::activate_svc_handler, ")
                ACE_TEXT ("opened as TAO_SERVER_ROLE\n")));

  // Here the service handler has been created and the new connection
  // has been accepted. #REFCOUNT# is one at this point.
  if (this->ACE_Concurrency_Strategy<SVC_HANDLER>::activate_svc_handler (sh,
                                                                         arg) == -1)
    {
      // Activation failed; drop our reference.
      sh->transport ()->remove_reference ();

      // #REFCOUNT# is zero at this point.
      return -1;
    }

  // The service handler has been activated. Now cache the handler.
  if (sh->add_transport_to_cache () == -1)
    {
      // Adding to the cache failed; close the handler.
      sh->close ();

      // close() does not decrease the reference count.
      sh->transport ()->remove_reference ();

      // #REFCOUNT# is zero at this point.
      if (TAO_debug_level > 0)
        {
          ACE_ERROR ((LM_ERROR,
                      ACE_TEXT ("TAO (%P|%t) - Concurrency_Strategy::activate_svc_handler, ")
                      ACE_TEXT ("could not add the handler to cache\n")));
        }

      return -1;
    }

  // Registration with cache is successful, #REFCOUNT# is two at this
  // point.
  TAO_Server_Strategy_Factory *f =
    this->orb_core_->server_factory ();

  int result = 0;

  // Do we need to create threads?
  if (f->activate_server_connections ())
    {
      // Thread-per-connection concurrency model
      TAO_Thread_Per_Connection_Handler *tpch = 0;

      ACE_NEW_RETURN (tpch,
                      TAO_Thread_Per_Connection_Handler (sh,
                                                         this->orb_core_),
                      -1);

      result =
        tpch->activate (f->server_connection_thread_flags (),
                        f->server_connection_thread_count ());
    }
  else
    {
      // Otherwise, it is the reactive concurrency model. We may want
      // to register ourselves with the reactor. Call the register
      // handler on the transport.
      result = sh->transport ()->register_handler ();
    }

  if (result != -1)
    {
      // Activation/registration successful: the handler has been
      // registered with either the Reactor or the
      // Thread_Per_Connection_Handler, and the Transport Cache.
      // #REFCOUNT# is three at this point.

      // We can let go of our reference.
      sh->transport ()->remove_reference ();
    }
  else
    {
      // Activation/registration failure. #REFCOUNT# is two at this
      // point.

      // Remove from cache.
      sh->transport ()->purge_entry ();

      // #REFCOUNT# is one at this point.

      // Close handler.
      sh->close ();

      // close() does not decrease the reference count.
      sh->transport ()->remove_reference ();

      // #REFCOUNT# is zero at this point.
      if (TAO_debug_level > 0)
        {
          const ACE_TCHAR *error = 0;
          if (f->activate_server_connections ())
            error = ACE_TEXT ("could not activate new connection");
          else
            error = ACE_TEXT ("could not register new connection in the reactor");

          ACE_ERROR ((LM_ERROR,
                      ACE_TEXT ("TAO (%P|%t) - Concurrency_Strategy::activate_svc_handler, ")
                      ACE_TEXT ("%s\n"), error));
        }

      return -1;
    }

  // Success: #REFCOUNT# is two at this point.
  return result;
}
```

`TAO_ORB_Core* TAO_Concurrency_Strategy<SVC_HANDLER>::orb_core_` [protected]

Pointer to the ORB Core.

Definition at line 78 of file Acceptor_Impl.h.

1.7.0
